Surgical Quality Assessment (SQA)

Endoscopic surgery demands specific psychomotor skills from the operating surgeon, and these skills directly affect how well a procedure is performed. As a high-performance, high-risk undertaking, surgery is prone to human error. Errors and adverse events are often subtle, however, and may go unnoticed during the operation itself, so the opportunity to improve in future cases is lost. Surgical error analysis is therefore important for investigating errors and adverse outcomes: it provides valuable feedback for learning and quality control and thereby increases patient safety.

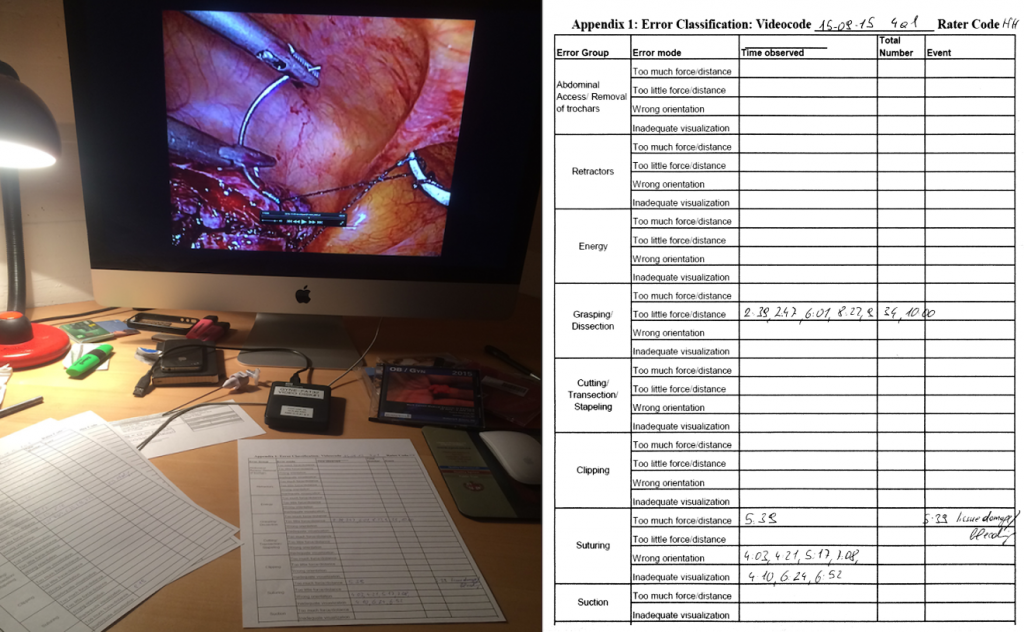

One way to perform surgical quality assessment (SQA) objectively is to analyze the unedited video footage of the surgery in accordance with a standardized rating scheme. The Generic Error Rating Tool (GERT) [1] is one such objective measure. It defines four error modes (e.g., too much or too little use of force/distance) and nine generic surgical tasks (e.g., grasping and dissection, suturing, use of suction) that are prone to these errors. Currently, the medical expert who performs this analysis uses a common video player (with simple play, pause, fast-forward, and reverse controls) and manually fills in a form, either on paper or electronically. For each error, the assessor classifies it by task group and error mode, writes down the video timestamp at which it occurs, and optionally adds further information such as free-text notes. This is a tedious and error-prone task, and because it is so cumbersome, SQA is not widely used today.
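The form described above can be read as a small record type. The following is a minimal sketch, not the layout of any existing tool: the names `ErrorAnnotation`, `ErrorMode`, `EXAMPLE_TASKS`, and `format_timestamp` are hypothetical, and only the error modes and task groups named in the text are included, so the full GERT scheme would need the remaining entries added.

```python
from dataclasses import dataclass, asdict
from enum import Enum
import json


class ErrorMode(Enum):
    # Assumption: only the two modes mentioned in the text are modelled here;
    # GERT defines four error modes in total.
    TOO_MUCH_FORCE_OR_DISTANCE = "too much use of force/distance"
    TOO_LITTLE_FORCE_OR_DISTANCE = "too little use of force/distance"


# Assumption: three of the nine generic task groups, as named in the text.
EXAMPLE_TASKS = {"grasping and dissection", "suturing", "use of suction"}


@dataclass(frozen=True)
class ErrorAnnotation:
    """One entry of the assessment form (hypothetical layout)."""
    video_id: str
    timestamp_s: float      # position in the unedited video, in seconds
    task: str               # task group in which the error occurred
    error_mode: ErrorMode
    note: str = ""          # optional free-text note

    def __post_init__(self):
        # Reject the kinds of slips that are easy to make on a manual form.
        if self.timestamp_s < 0:
            raise ValueError("timestamp must be non-negative")
        if self.task not in EXAMPLE_TASKS:
            raise ValueError(f"unknown task group: {self.task!r}")

    def to_json(self) -> str:
        record = asdict(self)
        record["error_mode"] = self.error_mode.value  # enums are not JSON-serializable
        return json.dumps(record)


def format_timestamp(seconds: float) -> str:
    """Render a position in seconds as HH:MM:SS, as shown by a video player."""
    hours, rest = divmod(int(seconds), 3600)
    minutes, secs = divmod(rest, 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}"
```

Validating each entry as it is created is one way software support could reduce transcription errors compared with a paper form.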

Our overall goal is to improve the efficiency of SQA through software support, since reducing the time and effort it requires makes SQA easier to adopt. In a first step, we want to speed up the entire process of manual SQA and make it more feasible. In a second step, we want to introduce semi-automatic SQA, where software tools automatically analyze the video content and suggest segments that potentially include an error. These segments can then be inspected further by the medical expert. In a third step, we want to improve the quality of SQA itself, e.g., by automatically detecting subtle technical errors that would be hard for a human assessor to recognize. To achieve these goals, we apply three fundamental technical methods: (1) semantics and knowledge modelling, (2) automatic video content analysis, and (3) appropriate video interaction features.
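The second step, suggesting segments for inspection, could look roughly like the sketch below. It assumes that some content analysis component (not specified here) has already produced one error score per second of video in the range 0 to 1. The function name `suggest_segments` and the parameters `threshold`, `min_length`, and `max_gap` are hypothetical choices for illustration.

```python
from typing import List, Sequence, Tuple


def suggest_segments(
    scores: Sequence[float],
    threshold: float = 0.5,
    min_length: int = 2,
    max_gap: int = 1,
) -> List[Tuple[int, int]]:
    """Group high-scoring seconds into candidate segments.

    Returns (start, end) pairs in seconds, with `end` exclusive.
    Hits separated by at most `max_gap` low-scoring seconds are merged,
    and segments shorter than `min_length` seconds are dropped.
    """
    segments = []
    start = None      # first second of the segment being built
    last_hit = None   # most recent second at or above the threshold
    for i, score in enumerate(scores):
        if score < threshold:
            continue
        if start is None:
            start = i
        elif i - last_hit - 1 > max_gap:
            # Gap too long: close the current segment and start a new one.
            segments.append((start, last_hit + 1))
            start = i
        last_hit = i
    if start is not None:
        segments.append((start, last_hit + 1))
    return [(a, b) for a, b in segments if b - a >= min_length]


# Hypothetical per-second scores for a ten-second clip.
scores = [0.1, 0.7, 0.8, 0.2, 0.9, 0.1, 0.1, 0.1, 0.6, 0.1]
suggested = suggest_segments(scores)
```

Merging nearby hits keeps the list of suggestions short, so the expert reviews a few longer segments rather than many isolated seconds.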

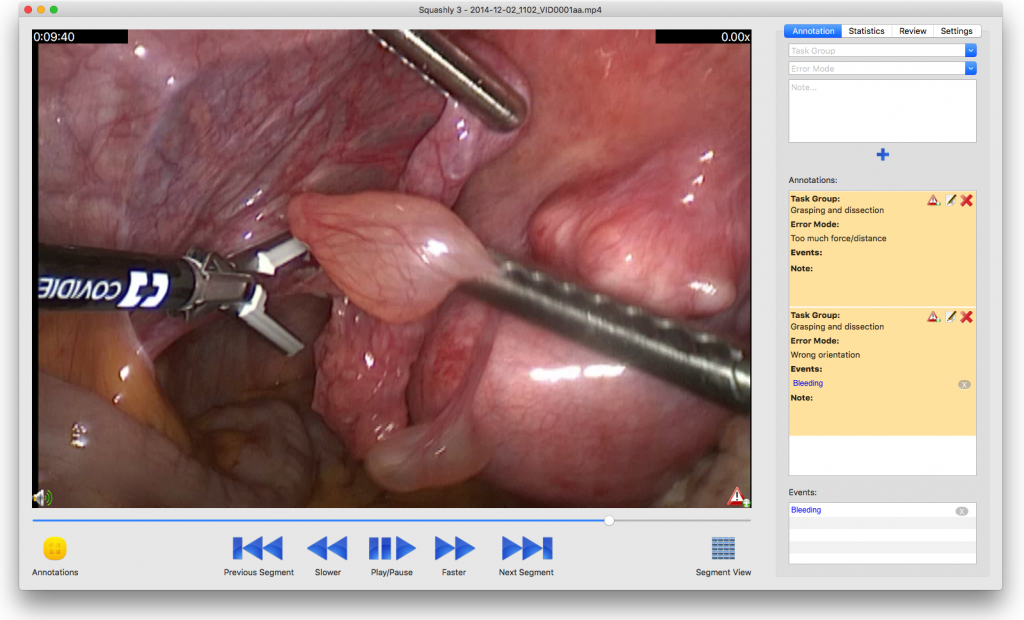

Together with a medical expert in surgical quality assessment, we developed a first prototype of a navigation and annotation tool. This prototype serves two purposes. First, it allows surgeons to navigate large video archives more efficiently and to inspect and annotate video recordings based on standard methods such as GERT or OSATS [2]. Second, it helps us collect a set of annotated videos that can be used as a training set for automatic content classification using machine learning. Another important task is the provisioning of expert knowledge, which may also be derived implicitly from data and interaction logs recorded by this tool.

An important future goal is to investigate semi-supervised learning approaches built on models such as Support Vector Machines (SVMs) [3] and Convolutional Neural Networks (CNNs) [4] and to determine how well they perform in automatic video content classification. In particular, we are interested in the achievable performance of automatic content suggestions for SQA. Additionally, we want to investigate whether other kinds of content classification (e.g., SVMs with CNN features or dynamic content descriptors) work better for that purpose than purely CNN-based classification.
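One way to quantify the "achievable performance" of automatic suggestions is to compare them with the timestamps an expert annotated. The sketch below is an assumption about how such a comparison could be set up, not an evaluation protocol defined by this project: a suggested segment counts as correct if it contains at least one annotated error, and an annotated error counts as found if it lies inside some suggested segment. The function name `evaluate_suggestions` is hypothetical.

```python
from typing import Sequence, Tuple


def evaluate_suggestions(
    segments: Sequence[Tuple[float, float]],
    error_times: Sequence[float],
) -> Tuple[float, float]:
    """Return (precision, recall) of suggested segments.

    segments:    (start, end) pairs in seconds, `end` exclusive.
    error_times: timestamps (seconds) of expert-annotated errors.
    """
    # Annotated errors that fall inside at least one suggested segment.
    found = [t for t in error_times if any(a <= t < b for a, b in segments)]
    # Suggested segments that contain at least one annotated error.
    correct = [(a, b) for a, b in segments if any(a <= t < b for t in error_times)]

    precision = len(correct) / len(segments) if segments else 0.0
    recall = len(found) / len(error_times) if error_times else 0.0
    return precision, recall


# Hypothetical data: three suggested segments, four annotated errors.
precision, recall = evaluate_suggestions(
    [(10, 20), (50, 60), (100, 110)],
    [12, 55, 200, 15],
)
```

Computing the same two numbers for each classifier variant (for example, CNN-based classification versus SVMs with CNN features) gives a common basis for comparing them.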

References

- [1] E. M. Bonrath, B. Zevin, N. J. Dedy, T. P. Grantcharov, “Error rating tool to identify and analyse technical errors and events in laparoscopic surgery”, British Journal of Surgery, vol. 100, no. 8, pp. 1080-1088, 2013.

- [2] J. A. Martin, G. Regehr, R. Reznick, H. Macrae, J. Murnaghan, C. Hutchison, M. Brown, “Objective structured assessment of technical skill (OSATS) for surgical residents”, British Journal of Surgery, vol. 84, no. 2, pp. 273-278, 1997.

- [3] M. Hearst, S. Dumais, E. Osuna, J. Platt, B. Schölkopf, “Support vector machines”, IEEE Intelligent Systems and their Applications, vol. 13, pp. 18-28, 1998.

- [4] A. Krizhevsky, I. Sutskever, G. E. Hinton, “ImageNet classification with deep convolutional neural networks”, in: F. Pereira, C. Burges, L. Bottou, K. Weinberger (Eds.), Advances in Neural Information Processing Systems 25, Curran Associates, Inc., 2012, pp. 1097-1105.